Character ranges and encodings

Lexer operates on characters and requires character-oriented input. Typical source of data for lexer are byte-oriented streams or files. Character data in such streams is stored using particular encodings, which differ in supported character range, and method of storing characters in underlying bytes. To use such byte-oriented data as input for lexer it must be first decoded.

Decoding can be done using standard library methods for handling input streams. In such case input is converted to stream of characters and can be fed directly to lexer.

Handling character-oriented data is easy with modern languages like C#, having built-it data types for storing characters. Underlying storage for such character types has form of two-byte words. Such storage format dates back to early days of Unicode standard, which used characters from two-byte (16 bit) range. Since extension of Unicode standard to 20 bits single character no longer fits into a two-byte word. If character storage used is two-byte oriented, characters that stand out of 16 bit range must be encoded using variable encoding. This means that reading characters from byte-oriented stream and saving them in two-byte words does not really decode characters. It merely recodes them to another standard. Analyzing characters contained in such two-byte words requires decoding them again which is inefficient.

Lexers generated by Alpag can be configured to read input in either of ways:

- byte-oriented. In this case Alpag handles decoding input format. This approach is preferred for performance reasons.

- character oriented. This method can be used when input data is already loaded into memory and stored as sequence of two-byte characters.

Internally lexer stores characters using four-byte double words. Decoding both byte-oriented and two-byte oriented data to this internal format introduces some overhead.

Encodings

Lexers generated by Alpag, when reading byte-oriented input, can process following input encodings:

name | storage | character range | description |

ASCII | 7bit (1 byte) | 7 bit (0..127) | standard ASCII |

ASCIIRAW (ASCII using 8 bits) | 8bits (1 byte) | 7 bit (0..127) range 128..255 can be transferred transparently | ASCII extended to 0..255 range |

ASCIICP (ASCII with code page) | 8 bits (1 byte) | depends on code page | one-byte encoded national character set |

UTF-8 | 20 bits (1 to 4 bytes) | full Unicode | byte-oriented variable length encoding |

UCS-2 | 16 bits (2bytes) | Unicode BMP Plane (0..65535 range). surrogate codes can be transferred transparently | Traditional fixed two-byte Unicode encoding supporting Basic Multilingual Plane |

UTF-16 | 20 bits (2 or 4 bytes) | full Unicode | Modern variable two-byte Unicode encoding |

Alpag can be configured to generate lexers that:

- read byte-oriented input, character-oriented input, or can be switched between these two formats

- support all above encodings, only a subset of them, or just a single encoding. In last case lexer has a fixed, non-switchable input encoding

- can recognize encoding of input file. Several options control what method lexer uses to guess encoding of input file.

Normally lexer is configured to support all encodings that can appear on the input, but sometimes a simplified approach can be taken. Choosing to support particular encoding means that all characters in this encoding will be properly converted to full scale (32bit) codes. If lexer has no specific rules for all characters, there may be no point in analyzing them.

UTF-8 is an example of a byte-oriented encoding, backward compatible with ASCII. Characters outside of ASCII appear as bytes in 128…255 range. It is possible to transfer such bytes transparently without interpreting them as Unicode characters by using ASCIIRAW format. Such approach requires some caution though. User must be sure that no lexer rule cuts a multibyte UTF-8 character 'in half'.

Similar optimization is possible for UCS-2 / UTF-16 pair.

Format and encoding of lexer output can be also configured. Usually lexer output is set to native string format used throughout the application and so can be made fixed (not switchable). However, if application is tunneling data from input to another output stream, ability to use custom output encoding may come in handy.

Options controlling input encoding are in Lexer.In group.

Options controlling output encoding are in Lexer.Out group.

Lexer character range

One of key lexer configuration parameters is character range. Character range specifies maximum character code lexer can handle.

Lexer character range calculation is based on following assumptions:

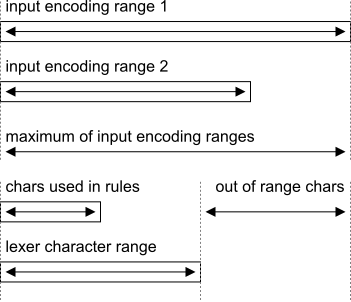

- by default character range is calculated so that it covers ranges of all input encodings specified for lexer. That is character range is wide enough to cover any character that can appear on input.

- Lexer character range always includes all character codes that were explicitly specified in lexer rules. For instance: if any lexer rule contains Cyrillic letter 'A' (code 0x410) character range is at least 0 to 0x410.

- catch-all regular expression elements like . (any char) do not modify lexer character range. Negated character classes like [^a] (any char but 'a') do not modify lexer character range either.

User can explicitly specify lexer character range narrower than range of input encodings. It is not possible though to make it narrower than range of characters used in lexer rules.

When lexer range is narrower than range of input encodings, some characters read from input may stand outside of lexer range. Such characters are called out-of-range characters (OOR characters). Out of range characters, before passing them to lexer, are converted to a special OOR symbol. Lexer rules can contain special symbol \! (escaped exclamation mark) which matches this OOR symbol (and any out-of-range character).

Note that conversion to OOR symbol is done only for the purpose of processing inside lexer engine. Original characters read from input remain intact. When lexer reports a match, matched text contains these original characters. All characters can be transferred verbatim from input to output, even if lexer automaton does not explicitly recognize them.

Illustration of character ranges and their relationships is shown on Fig. 7. Presented example shows lexer character range which was manually configured to be narrower than sum of input ranges, resulting in nonempty OOR range.

Lexer ranges calculated by Alpag are, by default, rounded up to nearest well known range limit like 8bit, 16bit or 20bit. You can manually set lexer range to any explicit value.

When lexer rules contain named character ranges like [[:Letter:]] characters in these ranges add up to summary range of characters used in grammar. When named range extends to entire Unicode it may be impossible to narrow down lexer range. In such case named character range should be trimmed using set operators to required subrange like this:

Options controlling lexer input range can be found in Lexer.Range group.

Some options related to OOR characters are also present in Lexer.Regex group.

Expression elements dependent on range

Certain regular expression elements refer to the concept of 'all characters'. These are:

- . (dot) symbol, matches any character

- [^characterList] – negated class, matches any character except those on characterList .

Behavior of these elements depends on several options.

EOLs

The . dot symbol by default does not include EOL symbols.

To match any character including EOL symbols an expression [.\n] must be used.

Negated class like [^a-z] matches any character not in a-z range, including EOL.

This default behavior can be modified using 's' (single line) option:

Inside block with 's' option turned on, any occurrence of . matches also EOLs.

OORs

By default both dot symbol . and negated class [^...] do not match Out of Range (OOR) characters. This behavior can be modified using 'x' (out-of-range) option:

Inside block with 'x' option on, any occurrence of . matches also OORs.

Moreover if option Lexer.Regex.RangeIncludesOOR is set to true dot symbol . and negated class [^...] match OOR characters by default.

OOR characters can be also explicitly matched using \! escape. Expression [.\!] matches also OORs regardless of other settings.

Details of range handling

Range handling depends on EOL handling mode set for lexer. Additional explanation of its principles is provided below. Examples given here assume that:

- lexer range was explicitly set to 0..0xFFFF range.

Providing that input encodings include entire Unicode, this leaves characters in 0x10000..0x10FFFF range as Out of Range (OOR) characters. - two newline escape sequences were defined: CR LF (\x0D\x0A) and LF (\0xA).

Lexer will process EOL symbols when option Lexer.Eol.Mode is set to either Symbol or Chars.

When EOL mode is set to Symbol, newline sequences (CR LF and LF) are converted to special EOL symbol. In such case:

- dot . symbol by default matches (0..0xFFFF) range (which does not include EOL). Code LF (\x0A) is present inside this range, but it never appears on input, since it is always converted to EOL. Code CR (\x0D) can appear when not part of CR LF sequence.

- inside (?s:...) dot . symbol matches (0..0xFFFF,<EOL>) range which explicitly specifies also EOL.

- inside (?x:…) dot . symbol matches (0..0xFFFF,<OOR>) range which includes also OOR.

- inside (?xs:…) dot . symbol matches (0..0xFFFF,<EOL>,<OOR>)

When EOL mode is set to Chars, codes CR and LF are passed to the lexer untouched. Occurrences of \n escape are replaced by regular expression matching (\n|\r\n).

Handling 'entire lexer range' is simplified in this mode: codes that appear anywhere in EOL sequences are simply removed from default lexer range without paying attention to exact format of EOL sequences. This results in following behavior:

- dot . symbol by default matches all characters in lexer range except codes CR and LF. Both CR and LF are left out. This means that alone CR will not be matched, even if it is not itself declared as standalone EOL sequence. Effectively the range is: (0..0x09, 0x0B..0x0C, 0x0E..0xFFFF).

- inside (?s:...) dot . symbol matches (0..0xFFFF) range which includes also CR and LF, regardless in what combination these appear. Note that single occurrence of . matches single CR or LF character. It will not match CR LF sequence as a single character.

- inside (?x:…) dot . symbol matches (0..0x09, 0x0B..0x0C, 0x0E..0xFFFF, <OOR>) range which includes also OOR.

- inside (?xs:…) dot . symbol matches (0..0xFFFF, <OOR>)